Visual Intelligence, the latest Apple Intelligence tool, arrives with iOS 18.2. The newest beta is already out and brings numerous other features as well. Visual Intelligence turns your camera into an AI-driven, all-seeing eye, similar to Google Lens. Here is everything you need to know about using Visual Intelligence on an iPhone running iOS 18.2 or later to get the most out of it.

Visual Intelligence: What Is It?

Visual Intelligence is a vision-based AI feature that lets you point your camera at objects to learn more about them or complete quick tasks. Siri, by contrast, is a voice-based assistant that responds to your spoken questions.

For example, when you point your iPhone at a restaurant, Visual Intelligence retrieves its rating and opening hours from Apple Maps, and possibly even information about its food. Scanning an event flyer saves you from entering the details manually: Visual Intelligence immediately suggests adding the event to your Calendar or getting directions to the venue.

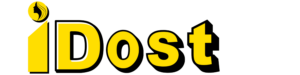

Point your camera at almost anything to get information about it from ChatGPT, or use Google search to find similar images. For example, you might find the name of a product to buy online or identify the breed of an animal or the species of a plant.

Think of Visual Intelligence as Google Lens with some Apple-specific tweaks. However, the feature is currently exclusive to the iPhone 16 series, which runs on Apple's A18 chips.

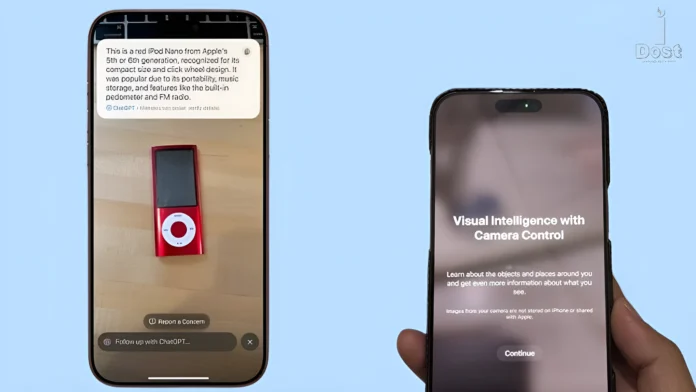

How to Activate Visual Intelligence

On the iPhone 16, Visual Intelligence is built directly into the Camera Control button. The iOS 18.2 update, currently in developer beta 1, is expected to roll out by the end of the year. To use Visual Intelligence, you will either need to wait for the stable release or switch to the beta channel and update to iOS 18.2.

- Long-press the new Camera Control button. This opens a camera-style interface with a capture button and a preview.

- Once it is active, point your camera at whatever you want to look up and tap the capture button to start Visual Intelligence.

- Once triggered, Visual Intelligence makes suggestions based on the image's content. If the image includes a date, it will suggest adding it to your Calendar; if it identifies text, it will offer to summarise it.

- Whatever the image contains, you will always have two options: Ask and Search.

- Ask: Sends the picture to ChatGPT so you can discuss it and learn more about it.

- Search: Runs a Google search on the picture to find similar images or links to buy the product.


How Can You Use Visual Intelligence in Daily Life?

- Point your iPhone at a landmark, and Visual Intelligence will show its name, background information, visiting hours, and nearby facilities.

- Point your iPhone's camera at a book cover or product, then use the Search option to find purchase links, reviews, and other relevant information.

- Point your iPhone at a plant or animal and use the Ask or Search option to identify the species. You can also find further information, such as its habitat, characteristic traits, or care advice.

- Point your iPhone at an event poster, and Visual Intelligence will capture the event's name, time, date, and location and let you add it straight to your Calendar.

- If you come across a long passage of text, point your camera at it to get a quick summary, or use ChatGPT to simplify it.

Visual Intelligence is a genuinely useful tool, though it has a few shortcomings. Google Lens, for instance, lets you record a video and then run a search on it, which helps when the subject is too large to fit in a single photo. Apple may add a similar feature in a future update.